Holt-Winters Method and Northam Temperature Data

Triple Exponential Smoothing, or the Holt-Winters method, is a forecasting technique that can capture both the underlying trend of a time series as well as a seasonal component. For example, a retail store may see consistently higher sales on weekends compared to weekdays (seasonal component) and a month-on-month increase due to their excellent marketing campaigns throughout the year (trend component).

We'll first run through a simple example showing how to perform Triple Exponential Smoothing, before implementing it in Python and testing it on a temperature dataset from the Bureau of Meteorology.

An Easy Example

First, let's have a look at the quarterly sales figures for ficticious manufacturer of Karaoke machines.

import numpy as np

import matplotlib.pyplot as plt

example = np.array([26,28,35,36,31,33,37,40,35,39,42,43])

x_ticks = [f'{yr}-{q}' for yr in '2019 2020 2021'.split()

for q in 'Q1 Q2 Q3 Q4'.split()]

plt.figure(figsize=(12,4))

plt.xticks(np.arange(12), x_ticks)

plt.ylabel('Karoke Machine Sales')

plt.yticks(np.arange(example.min(), example.max(), 2))

plt.plot(example)

plt.show()

There is an obvious upwards trend to the series - so business is good! A closer look reveals a peak at Q4 each year which then drops to a low at Q1. We can add a trend-line through the series and visualise this seasonal pattern.

x = np.arange(1,13)

trend = 1.2*x + 27

plt.figure(figsize=(12,4))

plt.xticks(np.arange(12), x_ticks)

plt.ylabel('Karoke Machine Sales')

plt.yticks(np.arange(example.min(), example.max(), 2))

plt.plot(example)

plt.plot(trend, linestyle='--')

for i in range(len(example)):

y = trend[i]

dy = example[i] - trend[i]

plt.arrow(i, y, 0, dy,

length_includes_head=True,

width=.04,

head_width=.2,

color='black')

plt.show()

There is a consistent increase in sales above the trend-line for Q3/Q4, which then falls below it in Q1/Q2. The business selling these Karaoke machines may want to know how many units it needs to stock in the next quarter, 2022 Q1. Intuition would tell us to continue the trendline up and take off around 2 or 3 units to account for the expected drop in Q1. This is the essence of Triple Exponential Smoothing.

Level, Trend and Seasonal Components

The three components a series is broken down in to are the level ($\ell$), trend ($b$) and seasonality ($s$). The DES tutorial covered the level and trend, so we'll now just need to add a seasonal component.

Let's look at the first two seasonal cycles from the Karoke machine sales and set up a table.

| QUARTER | TIME (t) | SALES ($y_t$) | LEVEL ($\ell_t$) | TREND ($b_t$) | SEASONAL($s_t$) | SMOOTHED ($\hat{y}$) |

|---|---|---|---|---|---|---|

| Q1 | 1 | 26 | ||||

| Q2 | 2 | 28 | ||||

| Q3 | 3 | 35 | ||||

| Q4 | 4 | 36 | ||||

| Q1 | 5 | 31 | ||||

| Q2 | 6 | 33 | ||||

| Q3 | 7 | 37 | ||||

| Q4 | 8 | 40 |

The first step is to initialise starting values for the three components. To do this, we'll also introduce another term, $m$, which will be the seasonal period. In the case of our quarterly sales figures, we have four steps in each seasonal cycle, so $m=4$.

A quick note that Triple Exponential Smoothing can be either "additive" or "multiplicative". The additive version looks at absolute differences, eg last quarter's sales were 2 units higher. The multiplicative version looks at percentage changes, eg last quarter's sales were 5% higher. We'll begin with the additive version.

Initial Level: This is going to be the mean of the first cycle. Sum the first four values for $y$ and divide by $m$.

$$\begin{aligned} \ell_{1} &= \frac{\sum_{t=1}^m y_t}{m} \\ &= \frac{26+28+35+36}{4} \\ &= 31.25 \end{aligned}$$Initial Trend: This is the difference between the mean of the first and second cycles, divided by how many steps are in that cycle. You can think of this as the gradient between the first two cycles.

$$\begin{aligned} b_{1} &= \frac{\sum_{t=m+1}^{2m} y_t - \sum_{t=1}^m y_t}{m^2} \\ &= \frac{(31+33+37+40)-(26+28+35+36)}{4^2} \\ &= 1.00 \end{aligned}$$Initial Seasonality: There will be four initial seasonal components, one for each quarter. These values will be the difference between $y_t$ and the initial level that was just calculated (31.25).

$$\begin{aligned} s_{1} &= y_1 - \ell_{1} \\ &= 26 - 31.25 \\ &= -5.25 \\ s_{2} &= y_2 - \ell_{1} \\ &= 28 - 31.25 \\ &= -3.25 \\ ... \end{aligned}$$The initial values can now be entered in to the table. Just a note that you will often see the initial values for the level and trend entered at t=4. But, just like in the DES tutorial, we're going to change the frame of reference so that it will allow for a more computationally efficient implementation in Python. So the initial level and trend will be entered at t=1.

| QUARTER | TIME (t) | SALES ($y_t$) | LEVEL ($\ell_t$) | TREND ($b_t$) | SEASONAL($s_t$) | SMOOTHED ($\hat{y}$) |

|---|---|---|---|---|---|---|

| Q1 | 1 | 26 | 31.25 | 1.00 | -5.25 | |

| Q2 | 2 | 28 | -3.25 | |||

| Q3 | 3 | 35 | 3.75 | |||

| Q4 | 4 | 36 | 4.75 | |||

| Q1 | 5 | 31 | ||||

| Q2 | 6 | 33 | ||||

| Q3 | 7 | 37 | ||||

| Q4 | 8 | 40 |

Introducing the Smoothing Factors

To work our way through the exponential smoothing process, we need to bring in the smoothing factors. The three components each have their own smoothing factor, denoted by $\alpha$, $\beta$ and $\gamma$ for level, trend and seasonality respectively. The values for the smoothing factors must fall between 0 and 1. To start off with, let's set $\alpha=0.3$, $\beta=0.2$ and $\gamma=0.1$.

The formulas for updating the level, trend and seasonal components are given by:

$$\begin{aligned} \ell_t &= \alpha (y_{t-1} - s_{t-1}) + (1 - \alpha)(\ell_{t-1} + b_{t-1}) \\ b_t &= \beta(\ell_t - \ell_{t-1}) + (1 - \beta)b_{t-1} \\ s_{t+m-1} &= \gamma(y_{t-1} - \ell_{t-1} - b_{t-1}) + (1 - \gamma)s_{t-1} \end{aligned}$$When we go ahead and plug in the numbers, we get

$$\begin{aligned} \ell_2 &= 0.3 (26 + 5.25) + (1 - 0.3)(31.25 + 1) \\ \ell_2 &= 31.95 \\ \\ b_2 &= 0.2(31.95 - 31.25) + (1 - 0.2)(1.00) \\ b_2 &= 0.94 \\ \\ s_5 &= 0.1(26 - 31.25 - 1) + (1 - 0.1)(-5.25) \\ s_5 &= -5.35 \end{aligned}$$We can go ahead and repeat this process for the rest of the table.

| QUARTER | TIME (t) | SALES ($y_t$) | LEVEL ($\ell_t$) | TREND ($b_t$) | SEASONAL($s_t$) | SMOOTHED ($\hat{y}$) |

|---|---|---|---|---|---|---|

| Q1 | 1 | 26 | 31.25 | 1.00 | -5.25 | |

| Q2 | 2 | 28 | 31.95 | 0.94 | -3.25 | |

| Q3 | 3 | 35 | 32.40 | 0.84 | 3.75 | |

| Q4 | 4 | 36 | 32.64 | 0.72 | 4.75 | |

| Q1 | 5 | 31 | 32.73 | 0.60 | -5.35 | |

| Q2 | 6 | 33 | 34.23 | 0.78 | -3.41 | |

| Q3 | 7 | 37 | 35.43 | 0.86 | 3.55 | |

| Q4 | 8 | 40 | 35.44 | 0.69 | 4.54 | |

| Q1 | 9 | 35 | 35.93 | 0.65 | -5.05 | |

| Q2 | 10 | 39 | 37.62 | 0.86 | -3.27 | |

| Q3 | 11 | 42 | 39.62 | 1.09 | 3.27 | |

| Q4 | 12 | 43 | 40.11 | 0.97 | 4.47 | |

| Q1 | 13 | 40.31 | 0.81 | -4.70 | ||

| Q2 | 14 | -2.89 | ||||

| Q3 | 15 | 3.07 | ||||

| Q4 | 16 | 4.22 |

Note that the example data ends at t=12, but we have values for the seasonal components up to t=16.

Making a Forecast

Now that we have our level, trend and seasonal components calculated, we can combine these together to construct our smoothed series. This is simply going to be,

$$\hat{y}_t = \ell_t + b_t + s_t$$So to get $\hat{y}_1$, we calculate

$$\begin{aligned} \hat{y}_1 &= \ell_1 + b_1 + s_1 \\ &= 31.25 + 1.00 - 5.25 \\ &= 27.00 \end{aligned}$$This can be repeated all the way up to and including t=12.

To make a forecast into the future, we take the last value we have for the level, add h-steps of the gradient and then make the seasonal adjustment depending on which quarter we are forecasting.

$$\hat{y}_{t+h} = \ell_{t+1} + h*b_{t+1} + s_{t+[(h-1) mod m] + 1}$$For example, to get the forecast for the last row in the table (t=16), we would calculate

$$\begin{aligned} \hat{y}_{16} &= \ell_{13} + 4*b_{13} + s_{16} \\ &= 40.31 + 4*0.81 + 4.22 \\ &= 47.79 \end{aligned}$$| QUARTER | TIME (t) | SALES ($y_t$) | LEVEL ($\ell_t$) | TREND ($b_t$) | SEASONAL($s_t$) | SMOOTHED ($\hat{y}$) |

|---|---|---|---|---|---|---|

| Q1 | 1 | 26 | 31.25 | 1.00 | -5.25 | 27.00 |

| Q2 | 2 | 28 | 31.95 | 0.94 | -3.25 | 29.64 |

| Q3 | 3 | 35 | 32.40 | 0.84 | 3.75 | 36.99 |

| Q4 | 4 | 36 | 32.64 | 0.72 | 4.75 | 38.11 |

| Q1 | 5 | 31 | 32.73 | 0.60 | -5.35 | 27.98 |

| Q2 | 6 | 33 | 34.23 | 0.78 | -3.41 | 31.60 |

| Q3 | 7 | 37 | 35.43 | 0.86 | 3.55 | 39.84 |

| Q4 | 8 | 40 | 35.44 | 0.69 | 4.54 | 40.67 |

| Q1 | 9 | 35 | 35.93 | 0.65 | -5.05 | 31.53 |

| Q2 | 10 | 39 | 37.62 | 0.86 | -3.27 | 35.20 |

| Q3 | 11 | 42 | 39.62 | 1.09 | 3.27 | 43.97 |

| Q4 | 12 | 43 | 40.11 | 0.97 | 4.47 | 45.55 |

| Q1 | 13 | 40.31 | 0.81 | -4.70 | 36.43 | |

| Q2 | 14 | -2.89 | 39.05 | |||

| Q3 | 15 | 3.07 | 45.83 | |||

| Q4 | 16 | 4.22 | 47.79 |

Triple Exponential Smoothing Implementation in Python

I've written out a Triple Exponential Smoothing class, which incorporates what has been shown so far. The example above used additive trend and seasonal components, the class also has the multiplicative version.

Much like in the Double Exponential Smoothing tutorial, the smoothing factors are optimised using scipy.optimize.minimize to find the lowest error between the input timeseries and the smoothed series. This can be accomplished by call the fit() method on the class. A forecast into the future can then be made by calling the predict() method.

import numpy as np

import pandas as pd

from scipy.optimize import minimize

from scipy.spatial.distance import sqeuclidean

class TripleExponentialSmoothing:

"""

Triple Exponential Smoothing

Parameters

----------

timeseries : np.ndarray

1-dimensional numpy array to model

trend : str {'add', 'mul'}, default 'add'

Addititive or multiplicative smoothing for trend component

seasonal : str {'add', 'mul'}, default 'add'

Addititive or multiplicative smoothing for seasonal component

s_periods : int

Number of periods in the seasonal cycle

"""

def __init__(self, timeseries, trend='add', seasonal='add', s_periods=7):

self.ts = timeseries

self.n = len(self.ts)

self.m = s_periods

self.trend = trend

self.seasonal = seasonal

if (self.trend == 'mul' or self.seasonal == 'mul') and not np.all(

self.ts > 0):

raise ValueError(

"Elements in time-series must be >0 for multiplicative \

trend or seasonal models")

models = {

('add','add'): self._tes_add_add,

('add','mul'): self._tes_add_mul,

('mul','add'): self._tes_mul_add,

('mul','mul'): self._tes_mul_mul,

}

self._model = models[(trend, seasonal)]

def _tes_add_add(self, params, lvl, b, s):

alpha, beta, gamma = params

for i in range(1, self.n+1):

lvl[i] = alpha*(self.ts[i-1]-s[i-1]) + (1-alpha)*(lvl[i-1]+b[i-1])

b[i] = beta*(lvl[i]-lvl[i-1]) + (1-beta)*b[i-1]

s[i+self.m-1] = (gamma*(self.ts[i-1]-lvl[i-1]-b[i-1])

+ (1-gamma)*s[i-1])

return lvl, b, s, lvl[:-1] + b[:-1] + s[:-self.m]

def _tes_add_mul(self, params, lvl, b, s):

alpha, beta, gamma = params

for i in range(1, self.n+1):

lvl[i] = alpha*(self.ts[i-1]/s[i-1]) + (1-alpha)*(lvl[i-1]+b[i-1])

b[i] = beta*(lvl[i]-lvl[i-1]) + (1-beta)*b[i-1]

s[i+self.m-1] = (gamma*(self.ts[i-1]/(lvl[i-1]-b[i-1]))

+ (1-gamma)*s[i-1])

return lvl, b, s, (lvl[:-1]+b[:-1]) * s[:-self.m]

def _tes_mul_add(self, params, lvl, b, s):

alpha, beta, gamma = params

for i in range(1, self.n+1):

lvl[i] = alpha*(self.ts[i-1]-s[i-1]) + (1-alpha)*(lvl[i-1]*b[i-1])

b[i] = beta*(lvl[i]/lvl[i-1]) + (1-beta)*b[i-1]

s[i+self.m-1] = (gamma*(self.ts[i-1]-(lvl[i-1]*b[i-1]))

+ (1 - gamma)*s[i-1])

return lvl, b, s, (lvl[:-1]*b[:-1]) + s[:-self.m]

def _tes_mul_mul(self, params, lvl, b, s):

alpha, beta, gamma = params

for i in range(1, self.n+1):

lvl[i] = alpha*(self.ts[i-1]/s[i-1]) + (1-alpha)*(lvl[i-1]*b[i-1])

b[i] = beta*(lvl[i]/lvl[i-1]) + (1-beta)*b[i-1]

s[i+self.m-1] = (gamma*(self.ts[i-1]/(lvl[i-1]*b[i-1]))

+ (1-gamma)*s[i-1])

return lvl, b, s, lvl[:-1] * b[:-1] * s[:-self.m]

def _opt(self, *args):

"""Return squared error between timeseries and smoothed array"""

*_, smooth = self._model(*args)

return sqeuclidean(smooth, self.ts)

def _init_arrays(self):

m = self.m

lvl = np.zeros(self.n+1)

b = np.zeros(self.n+1)

s = np.zeros(self.n+m)

lvl[0] = self.ts[:m].mean()

if self.trend == 'add':

b[0] = (self.ts[m:2*m].sum()-self.ts[:m].sum()) / m**2

if self.trend == 'mul':

b[0] = (self.ts[m:2*m].sum()/self.ts[:m].sum()) ** (1/m)

if self.seasonal == 'add':

s[:m] = self.ts[:m] - lvl[0]

if self.seasonal == 'mul':

s[:m] = self.ts[:m] / lvl[0]

return lvl, b, s

def fit(self):

"""Find optimal values for the smoothing factors"""

lvl, b, s = self._init_arrays()

alpha_init = 0.5 / self.m

beta_init = 0.1 * alpha_init

gamma_init = 0.05 * (1-alpha_init)

params = [alpha_init, beta_init, gamma_init]

bounds = [(0,0.5), (0,0.5), (0,0.5)]

args = (lvl, b, s)

optimised = minimize(self._opt, params, args=args,

bounds=bounds)

self.opt_params = optimised.x

def predict(self, start, end):

"""

Return a forecast between the specified start and end points

Parameters

----------

start : int

Zero-indexed, point to begin forecasting

end : int

Index of final prediction

Returns

-------

forecast : np.ndarray

"""

lvl, b, s = self._init_arrays()

params = self.opt_params

lvl, b, s, smooth = self._model(params, lvl, b, s)

self.components = {'level': lvl, 'trend': b, 'seasonal': s}

h = end - self.n + 1

if h >= 1:

if self.trend == 'add':

if self.seasonal == 'add':

forecast = np.array([lvl[-1] + i*b[-1] + s[-(-i%self.m)-1]

for i in range(1, h+1)])

elif self.seasonal == 'mul':

forecast = np.array([(lvl[-1] + i*b[-1])*s[-(-i%self.m)-1]

for i in range(1, h+1)])

if self.trend == 'mul':

if self.seasonal == 'add':

forecast = np.array([lvl[-1]*b[-1]**i + s[-(-i%self.m)-1]

for i in range(1, h+1)])

elif self.seasonal == 'mul':

forecast = np.array([lvl[-1] * b[-1]**i * s[-(-i%self.m)-1]

for i in range(1, h+1)])

smooth = np.concatenate((smooth,forecast))

return smooth[start:end+1]

We'll now have a look at using this class to model monthly temperature data.

Northam Temperature Data

I've chosen the monthly mean maximum temperature recorded for Northam, WA. You can get dataset from the Bureau of Meteorology's Climate Data Online section or download it here.

northam = pd.read_csv('data/IDCJAC0002_010111_Data12.csv')

northam.head()

There are a few missing measurements, so we'll foward fill these with Pandas' ffill() function. Then slice the monthly columns and then flatten it into a one-dimensional array.

northam = northam.ffill() # Forward fill na values

ts = northam.loc[:118,'Jan':'Dec'].values.flatten()

import matplotlib.pyplot as plt

# Plot the temperature data

plt.figure(figsize=(12,4))

plt.plot(np.arange(1902,2021,1/12), ts)

plt.title('Northam - Monthly Mean Maximum Temperature')

plt.xlabel('Year')

plt.ylabel('Temperature(°C)')

plt.show()

Using the TripleExponentialSmoothing Class

We'll first create an instance of the class using additive trend and seasonal components. We'll then call the fit() method to optimise the smoothing factors.

tes = TripleExponentialSmoothing(ts, trend='add', seasonal='add',

s_periods=12)

tes.fit()

The optimal smoothing factors are found in the opt_params attribute, which are values for alpha, beta and gamma respectively.

tes.opt_params

If we want to forecast into the future, we call the predict() method. The start and end parameters are the index values of the forecast array, ie choosing start=0 and end=5 will return an array of length 6.

Below will forecast 24 months into the future.

h = 24

forecast = tes.predict(start=0, end=len(ts)+h-1)

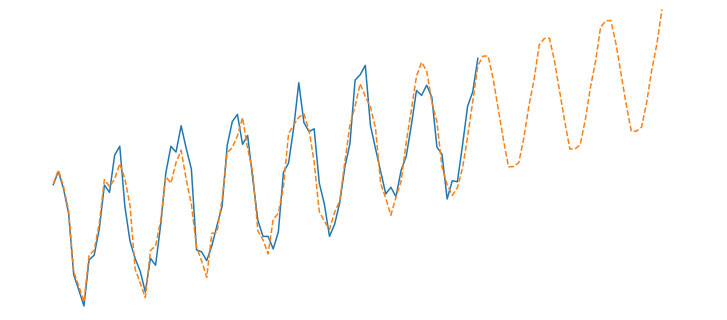

We can plot the last few years of temperature measurements along with the forecast, which which will continue 24 months into the future.

plt.figure(figsize=(12,5))

plt.plot(np.arange(2016,2021,1/12),ts[-5*12:],label='Actual')

plt.plot(np.arange(2016,2023,1/12),forecast[-7*12:],

linestyle='--',label='Forecast')

plt.title('Northam - Monthly Mean Maximum Temperature')

plt.xlabel('Year')

plt.ylabel('Temperature(°C)')

plt.legend()

plt.show()

Additive or Multiplicative Components

We have a choice between additive and multiplicative smoothing. If the data has values that are negative or zero, then we would be limited to additive smoothing only. But since the temperature is positive, we are free to choose either. Let's check the squared error for each combination.

trend_options = ['mul','add']

seasonal_options = ['mul','add']

for t in trend_options:

for s in seasonal_options:

tes = TripleExponentialSmoothing(ts, trend=t, seasonal=s, s_periods=12)

tes.fit()

forecast = tes.predict(0, len(ts)-1)

error = sqeuclidean(forecast, ts)

print('Trend=%s, Seasonal=%s, MSE=%.3e' % (t, s, error))

The multiplicative trend with additive seasonal option has the lowest error, but ever so marginally.

Changing Temperature Trend

The level, trend and seasonal components are stored in a dictionary in the components attribute. For example, we can access the final value for the trend component. When using a additive smoothing, a positive value for the trend would indicate rising temperatures over time, a negative value would be a falling trend.

tes.components['trend'][-1]

An interesting plot we could do is see how the trend component changed with time.

n = len(northam)

x = np.arange(1902,2021,1/12)

plt.figure(figsize=(12,4))

plt.title('Change in Trend Component')

plt.xlabel('Year')

plt.ylabel('Trend Component (°C)')

plt.plot(x, tes.components['trend'][:-1])

plt.plot(x, 0*x, color='gray', linestyle='--')

plt.show()

We can see that prior to around 1940, the temperature trend was negative (ie temperatures were falling). Since then it has plateaued and has remained relatively steady.